Beam: Better Decisions, Lower Risk, with Multi-Model AI Reasoning

April 2, 2024

Enrico Ros

Update: thanks for the strong response to Beam over the weekend. We updated this post on April 7 in relation to the great "More Agents Is All You Need" paper.

Introduction

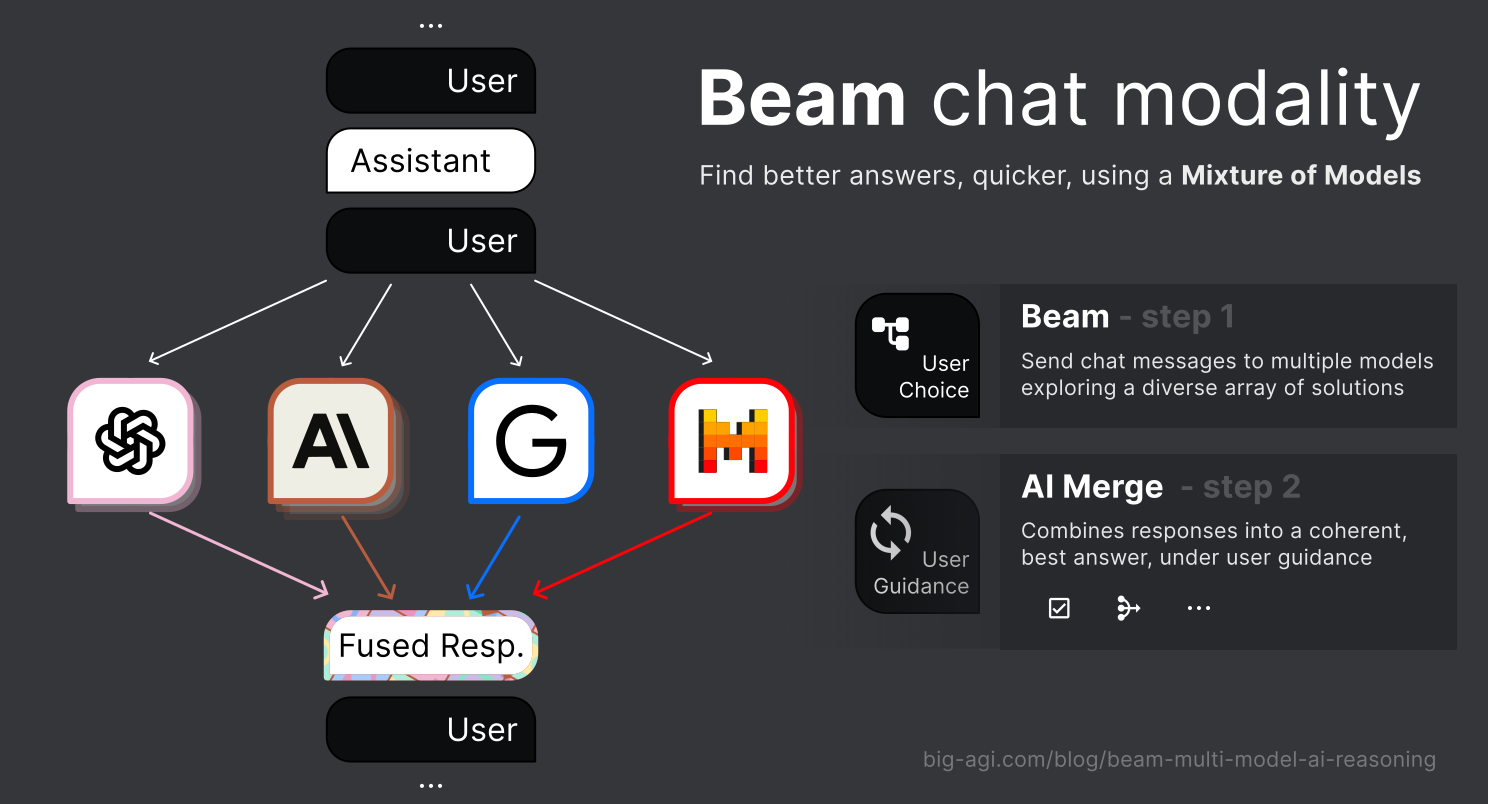

As users become more familiar with AI language models, the models' limitations become increasingly apparent and frustrating, leading to a desire for more intelligent and controllable AI interactions. Beam, a groundbreaking chat modality in big-AGI, addresses this need by enabling users to easily engage multiple models simultaneously and fuse their best responses, achieving better output and wiser decisions with lower risk and fewer AI hallucinations.

What is Beam?

Beam is a new chat modality in big-AGI that leverages multiple AI models from diverse families simultaneously. Users Beam a chat message for:

- better brainstorming and idea generation abilities

- more informed decisions, with more breadth of exploration

- fewer LLM hallucinations, diluted across multiple models

- higher-stakes outputs, where the value of a better answer far exceeds the extra cost

When engaging in a Beam chat, the user sends a message to Beam (Ctrl + Enter) instead of to a single LLM. This is represented in the diagram below.

Beam generates responses from user-selected models independently, then synthesizes them using advanced merging techniques to produce a single, coherent and comprehensive output.
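The two steps described above (independent generation, then a merge) can be sketched in a few lines. This is a minimal illustration and not big-AGI's actual implementation: the `Model` type, the `beam` function, the stub models, and the wording of the merge prompt are all assumptions made for the example.

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical model interface: takes a prompt, returns a reply.
Model = Callable[[str], Awaitable[str]]


async def beam(prompt: str, models: dict[str, Model], fuser: Model) -> str:
    """Send the prompt to every model in parallel, then ask `fuser` to merge."""
    names = list(models)
    # Each model answers independently; none sees the others' replies.
    replies = await asyncio.gather(*(models[name](prompt) for name in names))
    # Build a single merge prompt that lists every candidate answer.
    candidates = "\n\n".join(f"[{n}]\n{r}" for n, r in zip(names, replies))
    merge_prompt = (
        f"User request:\n{prompt}\n\n"
        f"Candidate answers:\n{candidates}\n\n"
        "Combine the best parts into a single answer."
    )
    return await fuser(merge_prompt)


def make_stub(reply: str) -> Model:
    """Stand-in for a real LLM call; always returns the same reply."""
    async def stub(_prompt: str) -> str:
        return reply
    return stub


async def echo_fuser(merge_prompt: str) -> str:
    """Stand-in fuser that returns the merge prompt it was given."""
    return merge_prompt
```

In a real setup, each stub would be replaced by a call to a different vendor's model, and the fuser would itself be an LLM chosen by the user.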

Achieve next-gen LLM performance with today's models.

Andrew Ng, a recognized leader in AI, stated that "[...] you may be able to get closer to (GPT-5) level of performance with agentic reasoning and an earlier model".

Recently, the "More Agents Is All You Need" paper measured model performance improvements when running multiple instances of the same model. Both techniques involve a sampling phase and a voting phase; however, Beam goes further by using AI to analyze and fuse the best parts of each answer, and by using diverse model families, which provides stronger diversification benefits.
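For intuition, sampling followed by voting can be reduced to a very small sketch. The exact-match vote below is a simplification chosen for illustration; it is not the procedure from the paper, nor what Beam does, and the `majority_vote` name is hypothetical.

```python
from collections import Counter


def majority_vote(answers: list[str]) -> str:
    """Return the most frequent answer after light normalization."""
    if not answers:
        raise ValueError("at least one answer is required")
    # Normalize so that trivial differences in spacing or case do not split votes.
    normalized = [a.strip().lower() for a in answers]
    winner, _count = Counter(normalized).most_common(1)[0]
    return winner
```

A plain vote like this only works when answers can be compared directly, such as a number or a multiple-choice letter. Free-form outputs such as code or prose rarely match exactly, which is one reason a fusion step that reads and combines the answers can be more useful.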

Beam shows that it is possible to exceed the performance of today's GPT models, and its design will likely allow it to stay ahead. Beam is designed with principles of human guidance for system stability, parallelism for time-saving, dynamic interfaces for bird's-eye-view decision-making, and orthogonality to maximize the strengths of diverse LLM families, effectively bringing "The Wisdom of Crowds" to the LLM world.

- "This is really a big deal and it yields better results than the current leading models" - Discord

- "This is incredible. I can't praise the Devs enough for releasing this tech." - Discord

- "It eliminates a huge number of hallucinations and bad answers." - Reddit

Technology

We illustrate the technology with a special kind of text generation: code. Language models' text outputs tend to be verbose, overly persuasive, and too singular and time-consuming to compare in an article. Thus, we requested HTML/CSS code, which makes for an easier at-a-glance evaluation. A short video example here.

The exploration: Beaming

Big-AGI allows you to Beam your chat message to multiple models. You can also refine and improve your conversation history by Beaming previous messages. This phase alone allows:

- Brainstormers to explore a wider range of ideas and perspectives, sparking creativity and innovation

- Researchers to gather more comprehensive information, ensuring no stone is left unturned, by tapping into the collective knowledge of various AI engines

To illustrate the power of beaming, let's consider a code example. We asked models from four LLM families (OpenAI, Anthropic, Google, and Mistral) to "Make a cool looking capybara shape animation using css in an html file".

Beaming - the responses generated by the AI models vary in their effectiveness: the OpenAI and Google models fail to resemble a capybara, while the Mistral model, though interesting and eye-catching, misses the mark. The Anthropic Claude 3 Opus model is the closest but still needs refinement.

The first phase of Beam is a UX feature that allows the user to quickly gather a diverse set of relevant responses, without any intelligent analysis yet. In this initial phase, the user can easily probe multiple models independently, as many times as they want, to explore the solution space and narrow it down to a set of relevant options.

Beam's side-by-side comparison already allows for quick evaluation of each model's output, whether it's a legal document, code or a story, saving time and effort in identifying the most promising starting points for further development.

The secret: Fusions

The power of Beam lies in its ability to fuse the disparate responses from multiple LLMs into a cohesive, optimized answer that leverages the best of each. This is where the second phase of Beam comes into play: Fusions. In the Fusions phase, Beam uses LLMs to analyze the generated responses, identify their key components, and intelligently combine them to create a unified, superior answer. Fusion enables:

- Decision-makers to arrive at a clear, well-justified course of action, by synthesizing the most relevant and insightful elements of each answer.

- Coders to get to a more robust, optimized solution, by leveraging the strengths of different AI models and filtering out the noise.

At launch, four options are available for this phase:

- Fusion auto-selects the optimal parts of all answers to satisfy the original user's query.

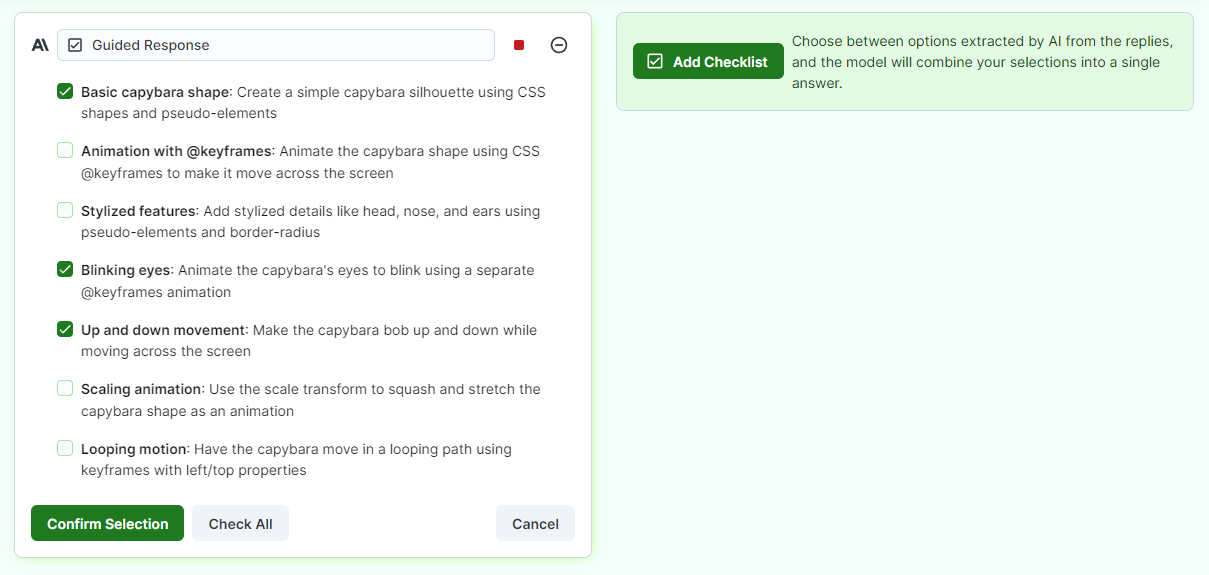

- Checklist lets the user choose the merge strategy after identifying the orthogonal components of the answers.

- Compare helps the user decide which AI-generated response is closest to their needs by creating a comparison table.

- Custom uses a user-defined merge strategy to combine the answers in a specific manner.

To showcase the power of fusions, let's apply two Fusion Merges to the earlier four AI-generated animations.

Fusion Merge - asking two different models to fuse the 4 AI-generated responses. Notice how both converged on a 'cooler' result (as requested by the user) than any of the starting options.

In this example, both the Claude 3 Opus (left) and OpenAI GPT-4 Turbo (0125) models have successfully synthesized a more visually appealing and coherent animation than any of the four starting AI-generated options, and one that more closely resembles the outcome the user asked for.

What this means for the user is that they can now go beyond what's possible with the best available single model, which is particularly useful for high-stakes chat sessions.

When more is at stake, such as when writing a legal document, planning a vacation, or conceiving a complex software architecture, you now have the ability to harness the collective intelligence of multiple models, leveraging the proverbial Wisdom of Crowds.

This is a groundbreaking step forward in the field of AI interactions, and something we believe every chat application should implement for its users.

The extra kicker: Guided merge

The Guided merge (the Checklist option listed earlier) provides the user with a simple checklist to choose the directions in which to move the search for the perfect answer.

This merge breaks down all the responses into their principal orthogonal components, which is as intellectually challenging as it is illuminating.

Checklist Merge - the user is presented with key insights from the analysis of all the AI-generated answers. In this particular case the user chooses to combine answers according to specific criteria: "Basic capybara shape", "Blinking eyes", and "Up and down movement".
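The selection step can be pictured as turning the ticked checklist items into a merge instruction. The sketch below is an assumption about how such a step could look, not big-AGI's code: the `build_checklist_prompt` function and the prompt wording are hypothetical, and the list of components would come from an LLM analysis that is not shown here.

```python
def build_checklist_prompt(
    request: str, components: list[str], selected: set[str]
) -> str:
    """Compose a merge instruction that keeps only the user-selected components."""
    unknown = selected - set(components)
    if unknown:
        raise ValueError(f"Unknown components: {sorted(unknown)}")
    # Preserve the order in which the components were shown to the user.
    keep = [c for c in components if c in selected]
    bullets = "\n".join(f"- {c}" for c in keep)
    return (
        f"Original request:\n{request}\n\n"
        f"Merge the candidate answers, keeping only these aspects:\n{bullets}"
    )


# Usage with the criteria from the example above; "Color gradient" is a
# made-up extra item standing in for a component the user leaves unchecked.
prompt = build_checklist_prompt(
    "Make a cool looking capybara shape animation using css in an html file",
    ["Basic capybara shape", "Blinking eyes", "Color gradient", "Up and down movement"],
    {"Basic capybara shape", "Blinking eyes", "Up and down movement"},
)
```

The resulting prompt would then be sent to the merging model together with the candidate answers.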

This merge is fascinating because it brings the idea of principal component analysis from the realm of statistics into linguistics. We will zoom in on the details in a future blog post.

For the user, this means an additional tool to enrich their understanding of which basic components matter in the answer they are searching for.

This is a novel AI interaction, and a leap into a future where the user points the AI in a precise direction at each new step.

Conclusions

Beam enables users to achieve next-gen LLM performance with today's models. By leveraging diverse LLMs and advanced merging techniques, Beam gets you to better answers faster. Whether you're brainstorming, researching, making decisions, or coding, Beam enhances your workflow and takes your results to the next level.

Beam is available today: explore diverse model combinations, master the merges, and refine your approach based on insights gained. With Beam as your copilot, you'll push beyond what's possible with today's AI models with speed, ease and precision, arriving at the best possible answers.

Experience Beam on big-AGI.com, explore the code on GitHub, join us on Discord, or get in touch at hello@big-agi.com, and let's create AI experiences that drive meaningful change.